In the healthcare industry, technological advances have significantly changed how medical professionals handle patient data. One innovation that has drawn attention is a transcription tool powered by an OpenAI model that is known to hallucinate, meaning it sometimes produces text that was never actually spoken.

The transcription tool was developed jointly by healthcare providers and technology specialists. It uses OpenAI’s language model to convert clinicians' spoken notes into written text, with the goal of streamlining hospital documentation and capturing patient information more quickly and accurately.

However, choosing a model with a known tendency to hallucinate raises ethical questions about both accuracy and patient privacy. Automating transcription can substantially reduce the documentation burden on healthcare professionals, but that benefit comes with the risk that the model will generate erroneous or misleading text.

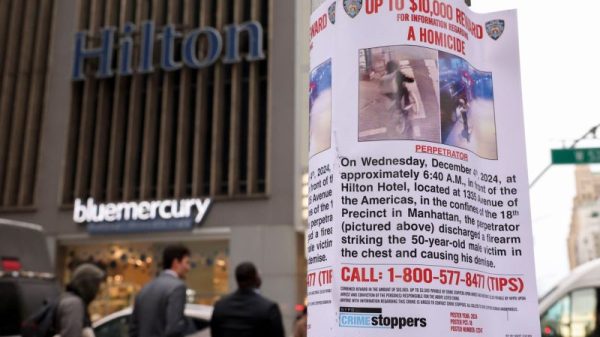

A key challenge is inaccuracy in the transcribed medical notes. Because the model can hallucinate, misleading content may end up in the record, such as a medication or dosage that was never mentioned, and such errors can directly affect care and treatment decisions. Inaccurate or incomplete documentation can lead to misdiagnoses, inappropriate treatments, and compromised patient safety.

Patient data privacy and security raise a separate set of concerns. Any AI transcription pipeline processes sensitive medical information, so healthcare organizations must put stringent data protection measures in place to safeguard patient confidentiality and comply with regulations such as HIPAA in the United States.

To address these challenges, healthcare providers using the tool must implement robust quality assurance processes that verify transcribed notes before they are relied upon. Review by qualified medical professionals is crucial for detecting and correcting erroneous or misleading content generated by the model. Adapting the model to medical terminology and clinical context may also reduce hallucinations, although it should not be expected to eliminate them.

Furthermore, transparent communication with patients about the use of AI-powered transcription and the measures that protect their data is essential for building trust and upholding ethical standards in healthcare. Patients have the right to know how their information is processed and what steps are taken to ensure its accuracy and confidentiality.

In conclusion, transcription tools built on advanced AI models offer significant gains in efficiency and productivity in healthcare settings, but the ethical implications and risks of relying on models prone to hallucination must be weighed carefully. By enforcing stringent quality control, upholding data privacy standards, and being transparent with patients, healthcare organizations can use AI tools responsibly and effectively to improve patient care.